This is a guest blog entry by Sam Gregory, Program Director for WITNESS. Originally posted on WITNESS’ blog.

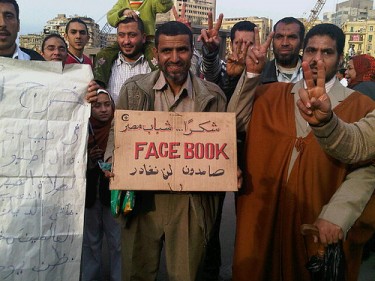

Protester's placard thanking youth of Egypt and Facebook (via Twitter user @richardengelnbc, and now widely re-posted online)

The successful nationwide organizing and subsequent protests in Egypt to oust the 30-year regime of President Hosni Mubarak have in part been facilitated by Facebook. But as media and technology commentators and human rights activists alike are noting, using Facebook for activism is fraught with risks.

Facebook’s insistence that users sign up with their ‘real identity’, and its deletion of accounts and groups that do not comply, make it difficult for human rights activists who need to work anonymously or pseudonymously. They also make it easier for governments to track not only individuals but also their networks.

The risks that affect activists using Facebook have their counterparts in video too. At the same time as the use of video has become more widespread in human rights work, the risks associated with shooting and circulating video, whether by professional human rights advocates or citizen activists, have become equally apparent.

Some of the most notable and publicized examples include the Saffron Revolution in Burma, when intelligence agents scrutinized citizen-shot photographs and video footage to identify demonstrators and bystanders. During the post-election protests in Iran, the government crowd-sourced the identification of protesters using images of their faces taken from YouTube. And then there is Asmaa Mahfouz’s recent experience in Egypt. Asmaa, whose video blog was said to be one of the catalysts of the January 25th protests, received threats from Mubarak supporters.

With human rights documentation and organizing increasingly taking place via video, and video becoming the primary mode of communication online, how can people make deliberate choices about when they speak out and how much anonymity they keep for themselves? At WITNESS, we’ve been thinking about this in terms of enabling choices about ‘visual privacy’ and ‘visual anonymity’. And what role, if any, should service providers like Facebook and YouTube play in making their platforms work for those engaged in human rights work, particularly with video?

In this post, I highlight some new dilemmas of privacy and anonymity related to the increasing ubiquity of human rights video. These themes have emerged during the course of research for a report for our Cameras Everywhere initiative, which we’ll publish next month. The report, drafted with Sameer Padania, formerly of WITNESS, is based on interviews with leading experts in human rights, technology, media and policy-making.

But given the current debate about the relationship between the major technology platforms and human rights, we’ve decided to share some relevant parts of the report with you today: our thoughts on anonymity, privacy and freedom of expression, along with some initial recommendations drafted with Sameer (which you’ll find at the end of this post), as a contribution to the discussion.

Freedom of Expression, Privacy and Anonymity: Grounding in Human Rights Principles

Everyone has the right to freedom of opinion and expression; this right includes freedom to hold opinions without interference and to seek, receive and impart information and ideas through any media and regardless of frontiers. – Universal Declaration of Human Rights (UDHR), Article 19

No one shall be subjected to arbitrary interference with his privacy, family, home or correspondence. – UDHR, Article 12

Anonymity is very much a part of the right to free speech. International human rights law addresses the right to free expression and the exchange of information in Article 19 of the UDHR (see above), and also in Article 19 of the International Covenant on Civil and Political Rights (ICCPR), which adds that restrictions on this right “shall only be such as are provided by law and are necessary: (a) For respect of the rights or reputations of others; (b) For the protection of national security or of public order (ordre public), or of public health or morals.” Freedom of association is protected as well, in Article 20 of the UDHR and Article 22 of the ICCPR. Further international declarations on the rights of human rights defenders also emphasize the capacity to disseminate and receive information on human rights topics.

Complementary to the right to freedom of expression is the right to freedom from arbitrary and unlawful interference with one’s privacy and correspondence, recognized both in Article 12 of the UDHR and in Article 17 of the ICCPR. The right to privacy is usually understood to include both an individual’s right to a zone of autonomy within a “private sphere” such as the home, and autonomy over personal choices within the public sphere. Much discussion of Internet privacy focuses on the security of personal data and personal identity.

Critical to an active right to both free expression and privacy is the right to communicate anonymously. Of course, this is not an absolute right; anonymity can also be used to cover criminal activity, for example. However, the real availability of options for anonymity, with no a priori restrictions on it, enables freedom of expression and supports the right to privacy.

The Right to Communicate Anonymously: Moving from Data to Video

Most contemporary discussions around anonymous communication on the Internet focus on data protection: on options for encryption, and on proxy servers and circumvention tools such as Tor, to conceal both the person communicating and the data being transmitted. These conversations often center on cases in which companies hand over user information to repressive governments (for example, Yahoo providing the details of journalist Shi Tao to the Chinese authorities), or in which governments track and access users’ personal data and communications. There is also a tendency to assert that privacy online is a thing of the past, and that societal trends and social norms are moving away from assumptions of privacy and anonymity (see, for example, contentious public statements made by Mark Zuckerberg, CEO of Facebook).

But in the case of video (or photos), a largely unaddressed question arises. What about the rights to anonymity and privacy for those people who appear, intentionally or not, in visual recordings originating in sites of individual or mass human rights violations?

Consider the persecution later faced by bystanders and by people who stepped in to film or assist Neda Agha-Soltan as she lay dying during the election protests in Iran in 2009. People in video can be identified by old-fashioned investigative techniques, by crowd-sourcing (as in the Iran example noted above) or by face detection and recognition software. Face recognition is now even built into consumer products like Facebook Photos, exposing activists who use Facebook to a layer of risk largely beyond their control.

Here, current methods of protecting privacy are largely inadequate. You can conceal the IP address from which you send a video (for example, using Tor), yet the person in the video may be quickly identified with facial recognition software. Metadata such as the location and the creator is often embedded in the image file itself. And there are no ready options, either when capturing footage or when uploading it to video-sharing and social media platforms, to better anonymize or conceal the identity of those who speak out, or of those who are accidentally caught in ‘incriminating’ circumstances. There are few ways to preserve what WITNESS has been thinking of as ‘visual privacy’, or to better define the choices we make about protecting our personal visual identity or holding onto ‘visual anonymity’.
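To make the metadata point concrete: in a JPEG photo, EXIF data (which can include GPS coordinates, timestamps and camera details) is normally stored in an APP1 segment near the start of the file. The following is a minimal Python sketch, not a WITNESS tool, assuming a well-formed JPEG with no padding bytes between segments. It copies the file while dropping APP1 (EXIF/XMP), APP13 (IPTC) and comment segments. The function name `strip_jpeg_metadata` is hypothetical, and the sketch does nothing about faces, voices or landmarks visible in the image itself; video container formats also store metadata differently.

```python
# Marker bytes (the byte after 0xFF) for segments that commonly carry identifying metadata.
APP1, APP13, COM = 0xE1, 0xED, 0xFE  # EXIF/XMP, IPTC (Photoshop), free-text comment
SOS, EOI = 0xDA, 0xD9                # start of scan, end of image


def strip_jpeg_metadata(data: bytes) -> bytes:
    """Return a copy of a JPEG with APP1, APP13 and COM segments removed.

    Sketch only: assumes a well-formed JPEG with no fill bytes between segments.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(data[:2])
    i = 2
    while i + 1 < len(data):
        if data[i] != 0xFF:
            raise ValueError(f"expected a segment marker at offset {i}")
        marker = data[i + 1]
        if marker in (SOS, EOI):
            # After SOS comes the compressed image data; copy everything from here on unchanged.
            out += data[i:]
            break
        # Segment length is big-endian and counts its own two bytes, but not the marker.
        length = int.from_bytes(data[i + 2:i + 4], "big")
        end = i + 2 + length
        if marker not in (APP1, APP13, COM):
            out += data[i:end]  # keep structural segments such as APP0 (JFIF) and tables
        i = end
    return bytes(out)
```

Dropping whole segments rather than editing individual EXIF tags keeps the parser small; the trade-off is that harmless data, such as the camera orientation tag, is lost as well.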

Visual anonymity or visual privacy may sound like contradictions in terms, but people often wish to speak out and to ‘be seen’ while at the same time concealing their face and identifying surroundings. Conversely, people caught in the background of a video may be unaware they are even being filmed in that moment and have no option to protect themselves. This is particularly true outside of mass protest settings where the wave of group solidarity may overwhelm any sense of personal privacy. But imagine you are someone speaking out from a far more marginalized position, for example, a gay person in Uganda, or a rural activist in Mexico. As video increasingly displaces text as the primary mode of online communication, the need for options for visual anonymity will only become more important.

Any steps to protect human rights activists, as well as victims and survivors of human rights violations, must first be grounded in the knowledge and agency of individuals themselves. Whether filming or being filmed, people can take proactive steps at the moment of filming, or before they upload, to protect themselves. WITNESS has blogged about this extensively in the past, including in this post from our blog series with YouTube, and provides a series of tips in our training materials (PDF). We are also developing a mobile phone application that enables better on-the-fly anonymization of visual images. However, I’d like to focus the rest of this post on the role that online and mobile service providers can play in supporting new forms of anonymity.

Online Service Providers and Mobile Networks Set Many Parameters

Some of the dilemmas of ‘real name’ identity and ‘visual anonymity’ come together when we consider that so much social justice advocacy in social media and online video takes place in spaces that are ‘public’ only to the extent that their corporate owners permit it. As the internet researcher Ethan Zuckerman has put it:

Hosting your political movement on YouTube is a little like trying to hold a rally in a shopping mall. It looks like a public space, but it’s not – it’s a private space, and your use of it is governed by an agreement that works harder to protect YouTube’s fiscal viability than to protect your rights of free speech.

Online service providers like Facebook and YouTube are private spaces that must largely prioritize as friction-free a user interface as possible. This approach may be at odds with important considerations for human rights content, such as anonymity, consent and contextualization (other potential concerns are summarized in this post from the Transmission network). In general, the human rights user has been de-prioritized as a consumer and user category (admittedly a small one in relative numbers) compared with other user scenarios on these mass-public platforms, if it is acknowledged as a user scenario at all.

Yet any progress in addressing human rights questions must also be informed, at the very least, by dialogue with the providers of technology services, hardware and software, both online and in the mobile arena, and both in the closed, proprietary sector and in the world of open video.

These online and mobile service providers dominate too much of the space, and (as we see from Egypt) are used for uploading and sharing by too many of the grassroots activists and citizen documenters creating human rights video, to be ignored as key actors. These providers can further support this work, or at least avoid actively hindering it. They can’t simply be bypassed in favor of niche or specialized spaces that seem more closely aligned with traditional human rights advocacy, whether ideologically, practically, or through their non-corporate ownership or content focus, but that have correspondingly small communities.

Next month we’ll publish our summary of recommended policy and practical steps that creators, human rights advocates, technologists (online and mobile, software and app developers, service providers and hardware makers), funders and policy-makers can take to mitigate risks in the use of technology (and particularly visual technologies) in human rights work, and to strengthen the overall environment for this work.

In the meantime, here’s a preview of the recommendations for online and mobile service providers. We’ll expand on them further next month in the report.

Recommendations for Online and Mobile Service Providers

There are three sets of changes – to policies, to functionality and to editorial content – that technology companies enabling the creation or sharing of video could make right away, and that would have a significant normative ripple effect. Online and mobile service providers should, in the first instance:

1. Change key usage and content policies (including those covering apps) to include specific reference to human rights:

- Create a “human rights” content category, and build a specific content review workflow (including an assessment of informed consent where appropriate) to deal with content tagged and flagged as such. This workflow should also look at account deactivation requests related to human rights content, or to anonymous or pseudonymous usage of sites and platforms.

- Conduct a human rights impact assessment of existing site policies, including mobile products, in consultation with relevant stakeholders, and make public some or all of the findings, along with recommendations for modifications.

- Participate in wider initiatives to develop, share and refine ethical codes or codes of conduct for online, mobile and ubiquitous video. These guidelines should specifically address human rights edge cases, but will likely apply to much broader general use cases.

2. Develop or incorporate tools and technologies into core functionality that enable both human rights activists and general users to exercise greater control over visual privacy:

- Build “visual privacy” checks (including masking geolocational and EXIF data) as well as standard privacy checks into product design, development and marketing workflows, drawing on risk scenarios outlined through human rights impact assessments.

- Follow the principle of “privacy by design”, or for products already in circulation, “privacy by default”.

- Build tools that let users selectively blur faces, mask voices and specific words, and apply other relevant anonymization and privacy-protection techniques directly at the point of upload (for platforms or social networks) or acquisition (for hardware or mobile apps).

- Build human rights-relevant nudges (e.g. related to addition of context, protection of identity, consent) into the user flow for any content categorized as “human rights” or tagged with human rights-related tags.

- Provide links at appropriate points in the mobile and online user flow to downloadable, mobile-ready video guides that help users deal better with informed consent, with protecting the safety and security of those filmed and those filming, and with vicarious trauma among those filming and those watching. Promote these guides where appropriate.

3. Create officially supported spaces in relevant products, and in mobile and online settings, for curation and discussion of online content from a human rights perspective, to strengthen user and broader public understanding of human rights in the digital era:

- Curate human rights-related video content selected by appropriately qualified, trained or experienced staff.

- Discuss transparently key cases involving inappropriate content or account take-down requests in human rights situations, including government requests and other related and emerging scenarios.

- Announce and highlight changes to site, mobile or product policies that specifically address human rights and privacy vulnerabilities or concerns raised in human rights impact assessments or through other means.

- Discuss editorial decisions that illuminate human rights content guidelines (described under #1).
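The point-of-upload blurring recommended under #2 can be sketched very simply. The Python below is a hypothetical illustration, not an existing platform feature: it assumes a single grayscale frame stored as a list of pixel rows, and a face rectangle the user has already selected (no face detection is involved). It pixelates that rectangle by replacing each block of pixels with their average. The name `pixelate_region` and its parameters are assumptions for illustration. A production tool would also need to handle color video frame by frame, follow faces across frames, and discard the original pixels so the masking cannot be undone from the uploaded copy.

```python
from typing import List

Image = List[List[int]]  # grayscale frame: one list of pixel values per row


def pixelate_region(img: Image, top: int, left: int, height: int, width: int,
                    block: int = 8) -> Image:
    """Return a copy of img with the given rectangle pixelated.

    Each block x block tile inside the rectangle is replaced by the integer
    mean of its pixels; tiles at the rectangle's edge may be smaller.
    """
    if block < 1:
        raise ValueError("block must be at least 1")
    out = [row[:] for row in img]  # work on a copy; the input frame is left untouched
    rows = len(img)
    cols = len(img[0]) if img else 0
    # Clip the user-selected rectangle to the frame boundaries.
    y_start, y_end = max(top, 0), min(top + height, rows)
    x_start, x_end = max(left, 0), min(left + width, cols)
    for y0 in range(y_start, y_end, block):
        for x0 in range(x_start, x_end, block):
            ys = range(y0, min(y0 + block, y_end))
            xs = range(x0, min(x0 + block, x_end))
            tile = [img[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```

Larger blocks remove more identifying detail; small blocks on a large face can leave it recognizable, so a real tool would likely choose the block size relative to the size of the selected region.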

We look forward to your feedback on our in-depth data and recommendations in early March.

Thanks to Sameer Padania for his additional editorial and substantive input on drafting this blog, and to Matisse Bustos Hawkes for her editing.